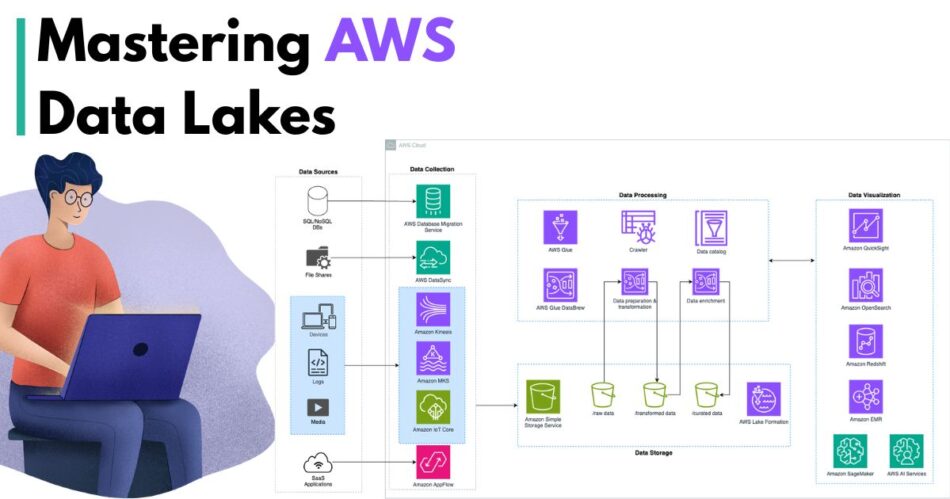

AWS Data Analytics Services help businesses that handle large volumes of structured and unstructured data. As global data generation continues to grow, organizations need scalable solutions to store, manage, and analyze that data efficiently. AWS offers a broad suite of services for setting up and managing a data lake tailored to enterprise needs.

Step 1: Define Data Lake Requirements

- Identify all data types: structured, semi-structured, and unstructured.

- Estimate data volume for both current usage and future growth.

- Establish compliance and security requirements (e.g., GDPR, HIPAA).

- Clarify business use cases: business intelligence, machine learning, real-time analytics.

- Understand access needs for different teams and roles.
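For the volume estimate, a simple compound-growth projection can serve as a rough planning aid. The sketch below assumes a constant month-over-month growth rate; the function name and the sample figures are hypothetical, and real growth is rarely this smooth, so treat the result as an order-of-magnitude estimate.

```python
def project_storage_tb(current_tb: float, monthly_growth: float, months: int) -> float:
    """Hypothetical helper: projected volume in TB after `months` of compound growth.

    Assumes a constant month-over-month growth rate (e.g. 0.05 for 5%).
    """
    if current_tb < 0 or monthly_growth < -1 or months < 0:
        raise ValueError("invalid input")
    return current_tb * (1 + monthly_growth) ** months


if __name__ == "__main__":
    # Illustrative assumptions only: 10 TB today, 5% growth per month.
    for horizon in (12, 24, 36):
        print(f"{horizon} months: {project_storage_tb(10, 0.05, horizon):.1f} TB")
```

Running the projection for several horizons makes it easier to see when lifecycle tiering (Step 2) starts to matter for cost.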

Step 2: Set Up an S3 Bucket Structure

- Use Amazon S3 as the central storage for your data lake.

- Enable versioning and default server-side encryption (SSE-S3 or SSE-KMS) to protect your data.

- Create a logical folder hierarchy (e.g., raw, processed, curated).

- Use lifecycle policies to manage long-term storage costs.

- Adopt consistent naming conventions for buckets, prefixes, and objects to simplify organization and retrieval.
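The bucket settings above can be expressed as configuration documents. The following sketch builds dictionaries in the shape that boto3's S3 client accepts for put_bucket_versioning, put_bucket_encryption, and put_bucket_lifecycle_configuration; it does not call AWS, so it runs without credentials. The bucket name, KMS key ARN, zone names, key layout, and the 30/90-day thresholds are all assumptions chosen for illustration.

```python
import json

# Hypothetical zone names matching the raw/processed/curated hierarchy.
ZONES = ("raw", "processed", "curated")


def versioning_config() -> dict:
    # VersioningConfiguration argument for s3.put_bucket_versioning.
    return {"Status": "Enabled"}


def encryption_config(kms_key_arn: str) -> dict:
    # ServerSideEncryptionConfiguration for s3.put_bucket_encryption:
    # default SSE-KMS for new objects, with S3 Bucket Keys to cut KMS requests.
    return {
        "Rules": [
            {
                "ApplyServerSideEncryptionByDefault": {
                    "SSEAlgorithm": "aws:kms",
                    "KMSMasterKeyID": kms_key_arn,
                },
                "BucketKeyEnabled": True,
            }
        ]
    }


def lifecycle_config() -> dict:
    # Assumed policy: raw objects move to Standard-IA after 30 days and to
    # Glacier Flexible Retrieval after 90; old versions expire after 30 days.
    return {
        "Rules": [
            {
                "ID": "raw-zone-tiering",
                "Filter": {"Prefix": "raw/"},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": 30, "StorageClass": "STANDARD_IA"},
                    {"Days": 90, "StorageClass": "GLACIER"},
                ],
                "NoncurrentVersionExpiration": {"NoncurrentDays": 30},
            }
        ]
    }


def object_key(zone: str, source: str, dataset: str, filename: str) -> str:
    # Hypothetical naming convention: <zone>/<source>/<dataset>/<filename>
    if zone not in ZONES:
        raise ValueError(f"unknown zone: {zone}")
    return f"{zone}/{source}/{dataset}/{filename}"


if __name__ == "__main__":
    print(json.dumps(lifecycle_config(), indent=2))
    # With boto3 and credentials, this might be applied as, for example:
    # s3.put_bucket_lifecycle_configuration(
    #     Bucket="my-data-lake", LifecycleConfiguration=lifecycle_config())
```

Keeping these documents in code (or infrastructure-as-code templates) makes the bucket conventions reviewable and repeatable across environments.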

Step 3: Configure AWS Lake Formation

- Register S3 buckets in Lake Formation for data governance.

- Define databases and tables to structure metadata.

- Grant data permissions through Lake Formation to AWS Identity and Access Management (IAM) users and roles.

- Apply fine-grained access control for users and services.

- Integrate with AWS Glue for seamless metadata cataloging.
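Registering a location and granting column-level access map to two Lake Formation API calls. This sketch only builds the keyword arguments in the shape boto3's lakeformation client expects for register_resource and grant_permissions; the bucket, prefix, role ARN, database, table, and column names are hypothetical.

```python
def register_location_kwargs(bucket: str, prefix: str) -> dict:
    # Arguments for lakeformation.register_resource. The service-linked role
    # lets Lake Formation access the registered S3 location.
    return {
        "ResourceArn": f"arn:aws:s3:::{bucket}/{prefix}".rstrip("/"),
        "UseServiceLinkedRole": True,
    }


def column_grant_kwargs(principal_arn: str, database: str, table: str, columns) -> dict:
    # Arguments for lakeformation.grant_permissions: SELECT on the listed
    # columns only, so other columns in the table stay hidden from the principal.
    return {
        "Principal": {"DataLakePrincipalIdentifier": principal_arn},
        "Resource": {
            "TableWithColumns": {
                "DatabaseName": database,
                "Name": table,
                "ColumnNames": list(columns),
            }
        },
        "Permissions": ["SELECT"],
    }


if __name__ == "__main__":
    print(register_location_kwargs("my-data-lake", "curated/"))
    print(column_grant_kwargs(
        "arn:aws:iam::111122223333:role/AnalystRole",
        "lake_curated", "orders", ["order_id", "order_date", "amount"],
    ))
```

Granting on named columns, rather than on the whole table, is one way to keep sensitive fields (for example, customer contact details) out of analysts' queries.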

Step 4: Ingest Data from Multiple Sources

- Use AWS DataSync to move on-premises file data, or AWS Glue connections to extract data from on-premises databases.

- Use Amazon Kinesis to ingest real-time streaming data.

- Use AWS Transfer Family for legacy system ingestion via SFTP/FTP.

- Leverage third-party APIs to pull data from external sources.

- Tag incoming data with source and timestamp metadata.
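Tagging records with source and timestamp metadata can happen before they are sent to a stream. The sketch wraps each payload in a small envelope and builds the arguments for boto3's kinesis.put_record (StreamName, Data, PartitionKey). The envelope field names and the stream name are assumptions, not a standard format.

```python
import json
from datetime import datetime, timezone


def tag_record(payload: dict, source: str, now=None) -> dict:
    # Hypothetical envelope: source system plus a UTC ingestion timestamp.
    now = now or datetime.now(timezone.utc)
    return {
        "source": source,
        "ingested_at": now.astimezone(timezone.utc).isoformat(),
        "payload": payload,
    }


def kinesis_put_kwargs(stream_name: str, record: dict) -> dict:
    # Arguments for kinesis.put_record. Using the source as the partition key
    # routes all records from one source to the same shard.
    return {
        "StreamName": stream_name,
        "Data": json.dumps(record).encode("utf-8"),
        "PartitionKey": record["source"],
    }


if __name__ == "__main__":
    rec = tag_record({"order_id": "A1", "amount": 10.5}, source="crm")
    print(kinesis_put_kwargs("ingest-stream", rec))
```

Partitioning by source is a simple default; a single high-volume source would concentrate traffic on one shard, so a finer-grained key may be needed in practice.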

Step 5: Catalog Data with AWS Glue

- Use the AWS Glue Data Catalog (one per account per Region) and create databases in it to hold dataset metadata.

- Set up Glue crawlers to scan S3 directories for schema discovery.

- Schedule crawlers to update the catalog regularly as new data arrives.

- Use the catalog with Athena, Redshift Spectrum, and QuickSight.

- Ensure schema consistency across datasets for compatibility.
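A scheduled crawler over one S3 prefix can be described with the parameters of boto3's glue.create_crawler. In this sketch the crawler name, role ARN, database, and path are hypothetical; the schedule uses Glue's six-field cron(...) syntax (evaluated in UTC), and the schema change policy updates table definitions while only logging deleted objects.

```python
def crawler_kwargs(name: str, role_arn: str, database: str, s3_path: str,
                   schedule: str = "cron(0 2 * * ? *)") -> dict:
    # Basic validation of the Glue cron format: cron(<six fields>).
    if not (schedule.startswith("cron(") and schedule.endswith(")")):
        raise ValueError("schedule must look like cron(...)")
    if len(schedule[5:-1].split()) != 6:
        raise ValueError("Glue cron expressions have six fields")
    return {
        "Name": name,
        "Role": role_arn,
        "DatabaseName": database,
        "Targets": {"S3Targets": [{"Path": s3_path}]},
        "Schedule": schedule,  # default: daily at 02:00 UTC
        "SchemaChangePolicy": {
            "UpdateBehavior": "UPDATE_IN_DATABASE",
            "DeleteBehavior": "LOG",
        },
    }


if __name__ == "__main__":
    print(crawler_kwargs(
        "raw-orders-crawler",
        "arn:aws:iam::111122223333:role/GlueCrawlerRole",
        "lake_raw",
        "s3://my-data-lake/raw/orders/",
    ))
```

Logging deletions instead of removing tables is a conservative choice; it avoids catalog entries disappearing if objects are moved temporarily.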

Step 6: Apply Data Transformation and ETL

- Use AWS Glue jobs or Apache Spark on Amazon EMR for data transformation.

- Remove duplicates, clean errors, and standardize formats.

- Validate data accuracy and completeness.

- Move transformed data to the curated zone in S3.

- Apply logging to track ETL job performance and results.
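The cleaning steps above (completeness checks, format standardization, deduplication, logging) can be illustrated in plain Python. This is not Glue or Spark code; it is a minimal sketch of the logic on a hypothetical orders dataset, with assumed field names and accepted date formats.

```python
import logging
from datetime import datetime

logger = logging.getLogger("etl.clean")

REQUIRED = ("order_id", "order_date", "amount")  # hypothetical schema
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")          # assumed input formats


def parse_date(value: str) -> str:
    # Standardize any accepted input format to an ISO 8601 date string.
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {value!r}")


def clean(records):
    """Return (cleaned, rejected); duplicates by order_id keep the first row."""
    seen, cleaned, rejected, duplicates = set(), [], [], 0
    for rec in records:
        # Completeness: every required field present and non-empty.
        if any(rec.get(f) in (None, "") for f in REQUIRED):
            rejected.append(rec)
            continue
        try:
            row = {
                "order_id": str(rec["order_id"]).strip(),
                "order_date": parse_date(str(rec["order_date"])),
                "amount": round(float(rec["amount"]), 2),
            }
        except ValueError:
            rejected.append(rec)  # malformed date or amount
            continue
        if row["order_id"] in seen:
            duplicates += 1
            continue
        seen.add(row["order_id"])
        cleaned.append(row)
    logger.info("kept=%d rejected=%d duplicates=%d",
                len(cleaned), len(rejected), duplicates)
    return cleaned, rejected
```

In a Glue job, the same steps would more likely be expressed with Spark DataFrame operations (for example, dropDuplicates for deduplication), with rejected rows written to a separate prefix for review.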

Step 7: Enable Querying with Amazon Athena

- Connect Athena to the AWS Glue Data Catalog.

- Run SQL-based queries on data stored in S3.

- Organize datasets using partitions for faster querying.

- Convert data into columnar formats such as Parquet or ORC to reduce the amount of data each query scans.

- Save and manage commonly used queries for teams.
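Partitioning and columnar storage come together in the table definition. The sketch generates an Athena CREATE EXTERNAL TABLE statement for a Parquet table partitioned by year and month, a matching Hive-style S3 path, and the arguments for boto3's athena.start_query_execution. Database, table, column, and bucket names are hypothetical.

```python
def partition_path(base: str, year: int, month: int) -> str:
    # Hive-style layout (key=value) lets Athena map paths to partitions.
    return f"{base.rstrip('/')}/year={year:04d}/month={month:02d}/"


def create_table_ddl(database: str, table: str, columns, location: str) -> str:
    cols = ",\n".join(f"  {name} {ctype}" for name, ctype in columns)
    return (
        f"CREATE EXTERNAL TABLE IF NOT EXISTS {database}.{table} (\n"
        f"{cols}\n"
        ")\n"
        "PARTITIONED BY (year string, month string)\n"
        "STORED AS PARQUET\n"
        f"LOCATION '{location}'"
    )


def start_query_kwargs(sql: str, database: str, output_location: str) -> dict:
    # Arguments for athena.start_query_execution.
    return {
        "QueryString": sql,
        "QueryExecutionContext": {"Database": database},
        "ResultConfiguration": {"OutputLocation": output_location},
    }


if __name__ == "__main__":
    print(create_table_ddl(
        "lake_curated", "orders",
        [("order_id", "string"), ("order_date", "date"), ("amount", "double")],
        "s3://my-data-lake/curated/orders/",
    ))
    # After new partitions land, MSCK REPAIR TABLE loads them into the catalog.
    print(start_query_kwargs("MSCK REPAIR TABLE lake_curated.orders",
                             "lake_curated", "s3://my-athena-results/"))
```

Queries that filter on the partition columns (for example, WHERE year = '2024' AND month = '01') let Athena skip other partitions entirely. For tables with many partitions, Athena's partition projection is an alternative to running MSCK REPAIR TABLE.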

Step 8: Secure the Data Lake

- Use IAM roles and S3 bucket policies for access control.

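- Use AWS KMS keys for encryption at rest, and enforce TLS (for example, with the aws:SecureTransport condition in bucket policies) for encryption in transit.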

- Implement Lake Formation permissions at table and column levels.

- Monitor data access and operations using AWS CloudTrail.

- Conduct periodic audits to ensure compliance and security.
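Encryption in transit is commonly enforced with a bucket policy that denies any request not made over TLS. The sketch builds that policy as a dictionary; serialized with json.dumps, it could be passed as the Policy argument of boto3's s3.put_bucket_policy. The bucket name is hypothetical.

```python
import json


def deny_insecure_transport_policy(bucket: str) -> dict:
    # Deny every S3 action on the bucket and its objects when the request
    # does not use TLS (aws:SecureTransport is "false").
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(deny_insecure_transport_policy("my-data-lake"), indent=2))
```

Because an explicit Deny overrides any Allow, this statement applies regardless of which IAM or Lake Formation permissions a caller holds.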

Step 9: Visualize Data with Amazon QuickSight

- Connect QuickSight to Athena or Redshift for data access.

- Build dashboards, KPIs, and custom visuals.

- Share dashboards with business teams securely.

- Embed visualizations into internal or external applications.

- Use filters, drill-downs, and parameters for interactivity.
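Connecting QuickSight to Athena is usually done in the console, but it can also be scripted. This sketch builds arguments in the shape of boto3's quicksight.create_data_source for an Athena data source; the account ID, data source ID, display name, and workgroup are placeholders, and sharing permissions are omitted for brevity.

```python
def athena_data_source_kwargs(account_id: str, data_source_id: str,
                              name: str, workgroup: str = "primary") -> dict:
    # Arguments for quicksight.create_data_source pointing at an Athena workgroup.
    return {
        "AwsAccountId": account_id,
        "DataSourceId": data_source_id,
        "Name": name,
        "Type": "ATHENA",
        "DataSourceParameters": {"AthenaParameters": {"WorkGroup": workgroup}},
    }


if __name__ == "__main__":
    print(athena_data_source_kwargs("111122223333", "lake-athena", "Data lake (Athena)"))
```

Datasets and dashboards are then built on top of this data source; the QuickSight service role also needs access to the underlying S3 data and the Athena query results location.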

Step 10: Automate and Monitor Workflows

- Use AWS Step Functions to orchestrate data workflows.

- Schedule Glue Jobs and crawlers using time-based triggers.

- Monitor data pipelines with Amazon CloudWatch.

- Set up alerts for job failures and performance degradation.

- Automate notifications and recovery steps for critical tasks.
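A Step Functions workflow that runs a Glue job, retries on failure, and sends an alert when retries are exhausted can be written in Amazon States Language. The sketch produces such a definition as a Python dictionary. The job name and SNS topic ARN are hypothetical, and the retry settings are assumptions to tune per pipeline.

```python
import json


def pipeline_definition(job_name: str, topic_arn: str) -> dict:
    return {
        "Comment": "Run a Glue ETL job and alert on failure",
        "StartAt": "RunGlueJob",
        "States": {
            "RunGlueJob": {
                "Type": "Task",
                # The .sync integration waits for the job run to finish.
                "Resource": "arn:aws:states:::glue:startJobRun.sync",
                "Parameters": {"JobName": job_name},
                "Retry": [{
                    "ErrorEquals": ["States.ALL"],
                    "IntervalSeconds": 60,
                    "MaxAttempts": 2,
                    "BackoffRate": 2.0,
                }],
                # After retries are exhausted, route to the alert state.
                "Catch": [{"ErrorEquals": ["States.ALL"], "Next": "NotifyFailure"}],
                "Next": "Done",
            },
            "NotifyFailure": {
                "Type": "Task",
                "Resource": "arn:aws:states:::sns:publish",
                "Parameters": {
                    "TopicArn": topic_arn,
                    "Message": f"Glue job {job_name} failed",
                },
                "Next": "Failed",
            },
            "Failed": {"Type": "Fail"},
            "Done": {"Type": "Succeed"},
        },
    }


if __name__ == "__main__":
    print(json.dumps(pipeline_definition(
        "clean-orders", "arn:aws:sns:us-east-1:111122223333:etl-alerts"), indent=2))
```

The serialized JSON is what a state machine's definition field expects; the workflow itself can be started on a schedule, for example by an Amazon EventBridge rule.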

Conclusion

- An AWS Data Lake is essential for storing and analyzing large-scale data.

- AWS Data Analytics Services streamline the setup and management process.

- Each service plays a distinct role: storage (S3), cataloging (Glue), governance (Lake Formation), querying (Athena), and visualization (QuickSight).

- Following a structured 10-step approach helps you build a secure, scalable, and efficient data lake.

- As data continues to grow in volume and variety, a well-managed AWS Data Lake becomes a cornerstone for informed decision-making.

Key AWS Services Used in a Data Lake Setup

- Amazon S3: Centralized storage layer.

- AWS Lake Formation: Governance, access control, and setup.

- AWS Glue: Metadata management and ETL.

- Amazon Athena: SQL-based query engine.

- Amazon Kinesis: Real-time data ingestion.

- Amazon QuickSight: Data visualization and dashboarding.

- AWS CloudTrail: Logging and audit monitoring.

By implementing these steps, businesses can unlock the full potential of their data using AWS Data Analytics Services, transforming raw data into actionable intelligence.

Frequently Asked Questions (FAQs)

1. What is an AWS Data Lake and how is it different from a traditional data warehouse?

A data lake in AWS is a centralized storage system built on Amazon S3 that allows organizations to store structured, semi-structured, and unstructured data at any scale. Unlike traditional data warehouses, which require data to be cleaned and structured before it is stored, a data lake lets you ingest raw data in its original format.

- Data Lake: Ideal for storing vast, diverse data types for multiple analytics use cases (BI, ML, real-time).

- Data Warehouse: Optimized for structured data, fast SQL queries, and predefined schemas.

With AWS Data Analytics Services, you can combine a data lake (S3) with tools like Glue (ETL), Athena (queries), and QuickSight (visualization) to build a comprehensive analytics platform.
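This difference is often summarized as schema-on-read (data lake) versus schema-on-write (warehouse). The plain-Python sketch below illustrates schema-on-read with made-up sample records: raw JSON lines are kept exactly as received, and a schema is applied only when the data is read. The record fields and the schema are hypothetical.

```python
import json

# Raw zone: events stored as received, with no upfront schema.
RAW_LINES = [
    '{"user": "u1", "amount": "12.50", "channel": "web"}',
    '{"user": "u2", "amount": 7}',
    '{"user": "u3", "amount": "3.00", "coupon": "X1"}',
]

# Schema defined by the reader: column name -> type conversion.
READ_SCHEMA = {"user": str, "amount": float, "channel": str}


def read_with_schema(lines, schema):
    for line in lines:
        raw = json.loads(line)
        # Project onto the schema: extra fields are ignored and
        # missing fields become None.
        yield {
            col: (conv(raw[col]) if raw.get(col) is not None else None)
            for col, conv in schema.items()
        }


if __name__ == "__main__":
    for row in read_with_schema(RAW_LINES, READ_SCHEMA):
        print(row)
```

A different team could read the same raw lines with a different schema (for example, one that includes the coupon field) without rewriting the stored data, which is what Glue tables over S3 and Athena queries make possible at scale.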

2. Why should a business consider AWS Data Analytics Services over other cloud providers for setting up a data lake?

AWS Data Analytics Services offer one of the most mature and comprehensive ecosystems for building data lakes. Benefits include:

- Scalability: S3 can store exabytes of data across regions.

- Cost-Effectiveness: Pay only for what you use, with tiered storage.

- Integrated Services: Tight integration between S3, Glue, Athena, Lake Formation, and QuickSight.

- Security & Compliance: Tools like KMS, IAM, and Lake Formation provide fine-grained access control.

- Global Availability: A broad footprint of AWS Regions worldwide, which helps meet data-residency and latency requirements.

These factors make AWS a preferred choice for enterprises with complex and large-scale analytics needs.

3. How do AWS Glue and Lake Formation work together in a data lake setup?

AWS Glue and AWS Lake Formation are complementary services that enhance metadata management and governance in a data lake:

- AWS Glue handles ETL (Extract, Transform, Load) processes and metadata cataloging. It uses crawlers to scan data and update the Glue Data Catalog.

- Lake Formation builds on Glue’s capabilities by adding security, access controls, and automated setup of data lakes.

Together, they allow you to:

- Automatically discover and catalog datasets.

- Define who can access specific data (down to the column, row, or cell level).

- Enforce governance policies while still enabling analytics tools like Athena and QuickSight.

This synergy simplifies data preparation while ensuring governance and compliance.

4. What are the common challenges in setting up an AWS Data Lake and how can they be avoided?

While setting up a data lake using AWS Data Analytics Services, organizations may face the following challenges:

- Poor Folder Structure: Lack of a logical hierarchy can make data hard to manage. Use clear layers: raw → processed → curated.

- Metadata Inconsistency: Running Glue crawlers irregularly can leave the catalog out of date. Schedule crawlers or trigger them when new data lands.

- Insufficient Security: Failing to apply proper IAM roles, KMS encryption, and Lake Formation policies increases the risk of data leaks.

- Inefficient Querying: Not using partitioning or columnar formats like Parquet can increase query time and cost.

Solution: Follow a structured approach, automate key workflows, and apply best practices across storage, governance, transformation, and querying.

5. Can AWS Data Analytics Services be used for real-time analytics in addition to batch processing?

Yes, AWS Data Analytics Services support both real-time and batch processing. For real-time use cases, Amazon Kinesis is commonly used:

- Amazon Kinesis Data Streams: Ingests data in real time from IoT devices, logs, and applications.

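- Amazon Data Firehose (formerly Amazon Kinesis Data Firehose): Delivers streaming data to S3, Redshift, or Amazon OpenSearch Service (the successor to Amazon Elasticsearch Service) with minimal setup.

- Amazon Athena and QuickSight: Can query and visualize the data shortly after Firehose delivers it to S3; delivery latency depends on the Firehose buffering settings.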

This real-time capability is ideal for fraud detection, operational monitoring, and dynamic dashboards, complementing the batch processing done through Glue and EMR.
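To connect the streaming and batch sides, a Firehose delivery stream can read from a Kinesis data stream and write time-partitioned objects into the raw zone. The sketch builds arguments in the shape of boto3's firehose.create_delivery_stream. The stream names, ARNs, prefixes, and buffering values are assumptions, and one IAM role is reused for source and destination only to keep the example short.

```python
def firehose_kwargs(name: str, source_stream_arn: str, role_arn: str, bucket_arn: str) -> dict:
    return {
        "DeliveryStreamName": name,
        "DeliveryStreamType": "KinesisStreamAsSource",
        "KinesisStreamSourceConfiguration": {
            "KinesisStreamARN": source_stream_arn,
            "RoleARN": role_arn,
        },
        "ExtendedS3DestinationConfiguration": {
            "RoleARN": role_arn,  # same role reused here for brevity
            "BucketARN": bucket_arn,
            # Hive-style date partitions built from the delivery timestamp.
            "Prefix": "raw/events/year=!{timestamp:yyyy}/month=!{timestamp:MM}/day=!{timestamp:dd}/",
            # An error prefix is needed when Prefix uses !{...} expressions.
            "ErrorOutputPrefix": "errors/!{firehose:error-output-type}/",
            # Flush when 64 MiB accumulate or 60 seconds pass, whichever is first.
            "BufferingHints": {"SizeInMBs": 64, "IntervalInSeconds": 60},
        },
    }


if __name__ == "__main__":
    print(firehose_kwargs(
        "events-to-lake",
        "arn:aws:kinesis:us-east-1:111122223333:stream/ingest-stream",
        "arn:aws:iam::111122223333:role/FirehoseDeliveryRole",
        "arn:aws:s3:::my-data-lake",
    ))
```

The buffering interval is the main trade-off: shorter intervals make data queryable in Athena sooner but produce more, smaller S3 objects, which can slow queries until they are compacted by a batch job.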