Introduction

AI models often fail not because of bad algorithms, but because of bad data. Without the right context, an AI system is like a student handed a textbook in an unfamiliar language.

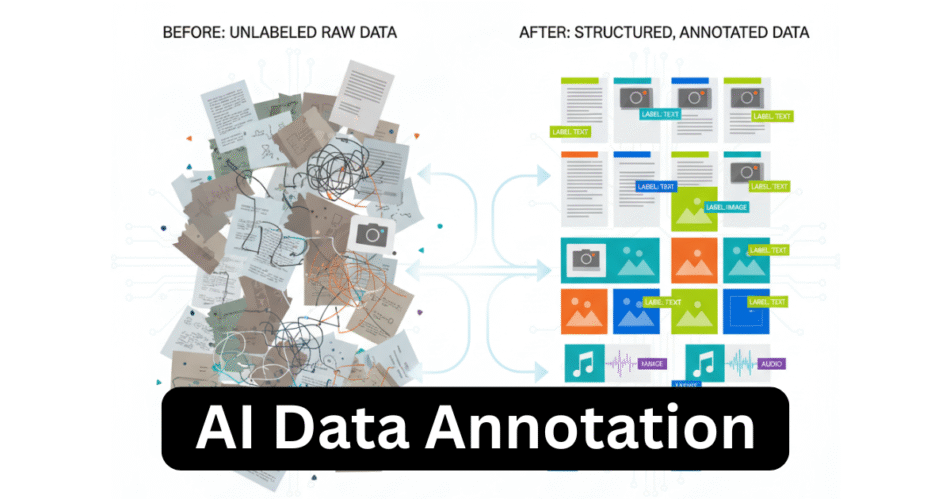

That’s the problem AI data annotation solves. By labeling and structuring raw data, annotation gives AI the context it needs to learn, recognize patterns, and make accurate predictions.

The Problem: Why AI Struggles Without Annotation

- Raw data is unstructured. Without labels, a model has no signal telling it which image shows a dog and which shows a cat.
- Context is missing. A sentence like “I love Apple” could refer to the fruit or the company.
- Scale makes errors worse. Small inaccuracies in training data multiply when models run at scale.

Result? Poor predictions, biased outcomes, and unreliable AI systems.

The Solution: Data Annotation

Data annotation provides clarity by attaching labels, tags, or metadata to raw inputs.

- Images → bounding boxes, polygons, or pixel labels
- Text → entities, emotions, or intents
- Audio → transcripts, speaker IDs, or tone labels
- Video → object tracking, scene segmentation, or event tagging

Annotation turns “raw noise” into “usable knowledge.”
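To make this concrete, here is a minimal sketch of what an annotated image record might look like in Python. The class names, field names, and file path are assumptions chosen for illustration, not a standard schema; real projects usually follow whatever format their labeling tool exports.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class BoundingBox:
    label: str     # class name assigned by the annotator
    x: float       # left edge, in pixels
    y: float       # top edge, in pixels
    width: float   # box width, in pixels
    height: float  # box height, in pixels


@dataclass
class ImageAnnotation:
    image_path: str           # hypothetical path to the raw image
    boxes: list[BoundingBox]  # one entry per labeled object


# A raw image becomes a training example once labels are attached to it.
record = ImageAnnotation(
    image_path="images/pets_001.jpg",
    boxes=[
        BoundingBox(label="dog", x=34, y=50, width=120, height=90),
        BoundingBox(label="cat", x=200, y=80, width=60, height=70),
    ],
)

# Serialize to JSON so the record can be stored or passed to a training job.
record_json = json.dumps(asdict(record), indent=2)
print(record_json)
```

The same pattern extends to the other data types: a text record would carry entity spans instead of boxes, and an audio record would carry timestamped segments.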

Key Techniques in AI Data Annotation

Image Annotation

- Bounding boxes for detecting cars, people, and objects
- Keypoints for facial recognition and gesture tracking
- Pixel-level segmentation for medical imaging

Text Annotation

- Entity recognition for names, products, and places
- Sentiment tagging for reviews and social media
- Intent classification for virtual assistants
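As a rough illustration of entity recognition labels, the sketch below stores each entity as a character span plus a label, which is one common way to pin down an ambiguous word like “Apple”. The sentence, the `ORG` and `PRODUCT` label names, and the `resolve_spans` helper are all hypothetical.

```python
text = "I love Apple, especially the new iPhone."

# Each entity is a half-open character range [start, end) with a label.
entities = [
    {"start": 7, "end": 12, "label": "ORG"},
    {"start": 33, "end": 39, "label": "PRODUCT"},
]


def resolve_spans(text, entities):
    """Return (surface_text, label) pairs, rejecting bad or overlapping spans."""
    resolved = []
    previous_end = 0
    for entity in sorted(entities, key=lambda e: e["start"]):
        start, end = entity["start"], entity["end"]
        # Spans must lie inside the text, be non-empty, and not overlap.
        if not (previous_end <= start < end <= len(text)):
            raise ValueError(f"invalid span: {entity}")
        resolved.append((text[start:end], entity["label"]))
        previous_end = end
    return resolved


resolved_entities = resolve_spans(text, entities)
print(resolved_entities)
```

Checking spans like this before training is a cheap way to catch off-by-one offsets, which are a frequent source of silent label errors.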

Audio Annotation

- Speech-to-text transcription
- Speaker diarization (“who said what”)
- Emotion recognition through tone and pitch

Video Annotation

- Multi-frame object tracking for self-driving cars
- Activity recognition for surveillance
- Event segmentation in sports or retail

Common Challenges (and How to Overcome Them)

| Challenge | Impact | Solution |
|---|---|---|
| High volume | Delays in training models | Semi-automated annotation with human-in-the-loop (HITL) review |
| Cost of labor | Expensive manual labeling | Outsourcing to specialized providers |
| Inconsistent quality | Model errors, unreliable outputs | Clear guidelines + multi-stage QA |
| Bias in labels | Skewed predictions | Diverse annotator teams + bias checks |
| Data sensitivity | Compliance risks | Secure platforms with GDPR/HIPAA policies |
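One way to put a number on “inconsistent quality” during QA is to measure how often two annotators agree beyond what chance alone would produce. The sketch below computes Cohen's kappa, kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e is the agreement expected by chance. The sample labels are made up, and what counts as an acceptable score is a project-level decision rather than something this article specifies.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators on the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty item list")

    n = len(labels_a)
    # p_o: fraction of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # p_e: probability of agreeing by chance, from each annotator's label frequencies.
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)

    if expected == 1.0:
        # Both annotators used a single identical label for everything.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical sentiment labels from two annotators on six reviews.
annotator_a = ["pos", "pos", "neg", "neg", "pos", "neg"]
annotator_b = ["pos", "neg", "neg", "neg", "pos", "neg"]

kappa = cohens_kappa(annotator_a, annotator_b)
print(f"kappa = {kappa:.3f}")
```

A low score usually points back to the guidelines: if trained annotators disagree often, the labeling instructions are probably ambiguous.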

Business Applications

- Healthcare: Annotated MRIs and X-rays help detect early signs of disease.
- Automotive: Self-driving cars rely on annotated road and traffic data.
- Finance: Fraud detection models need labeled transaction data.
- Retail: Product tagging powers recommendation engines and search.
- Security: Annotated video enables smarter surveillance systems.

Why Humans Still Matter

Even as annotation tools become more advanced, human involvement remains critical:

- Contextual understanding of ambiguous cases
- Bias reduction by applying human judgment
- Quality assurance in edge cases where AI falls short

This human-in-the-loop (HITL) approach balances automation with expertise.
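A simple form of HITL can be sketched as confidence-based routing: a model pre-labels each item, high-confidence labels are accepted automatically, and everything else goes to a human reviewer. The 0.9 threshold, the field names, and the sample predictions below are assumptions for illustration only.

```python
def route_by_confidence(predictions, threshold=0.9):
    """Split model pre-labels into auto-accepted items and items for human review."""
    auto_accepted = []
    needs_review = []
    for item in predictions:
        if item["confidence"] >= threshold:
            auto_accepted.append(item)
        else:
            needs_review.append(item)
    return auto_accepted, needs_review


# Hypothetical pre-labels produced by an annotation-assist model.
predictions = [
    {"id": "img_001", "label": "dog", "confidence": 0.98},
    {"id": "img_002", "label": "cat", "confidence": 0.62},
    {"id": "img_003", "label": "dog", "confidence": 0.91},
    {"id": "img_004", "label": "cat", "confidence": 0.45},
]

auto_accepted, needs_review = route_by_confidence(predictions)
print("auto-accepted:", [p["id"] for p in auto_accepted])
print("sent to reviewers:", [p["id"] for p in needs_review])
```

Raising the threshold sends more items to people and costs more; lowering it saves effort but lets more model mistakes through, so the value is worth tuning against a sample that humans have already checked.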

In-House vs. Outsourced Annotation

- In-House Teams: Greater control, but costly and harder to scale.
- Outsourcing Partners: Cost-effective, faster, and easier to expand globally.
- Hybrid Models: In-house oversight with outsourced execution.

Future Outlook

- AI-assisted annotation will cut down repetitive work.
- Synthetic data will reduce dependence on manual labeling.
- Domain-specific expertise will shape annotation for healthcare, finance, and robotics.
- Ethical annotation will be a must, addressing fairness, inclusivity, and privacy.

FAQs

Q1. Why can’t AI learn without annotation?

Because raw data lacks context. Supervised models learn from labeled examples, and annotation supplies those labels.

Q2. Can annotation be automated completely?

Not fully. Automation helps, but human review ensures accuracy.

Q3. What industries benefit most from annotation?

Healthcare, automotive, finance, retail, and security.

Q4. How do companies reduce annotation costs?

By outsourcing, using semi-automated tools, and applying HITL.

Q5. What’s the biggest risk in annotation?

Bias in labeling, which can lead to unfair or inaccurate AI outcomes.

Conclusion

AI data annotation may not grab headlines, but it is the silent driver of AI success. Without it, algorithms cannot learn, adapt, or perform reliably.

For businesses, the choice is clear: invest in an annotation strategy, whether through in-house teams, outsourcing, or a hybrid model, to unlock AI’s full potential.